Ridge Regression

Ridge regression is also known as Tikhonov regularization. It adds an L2 penalty on the weights to the loss function, which keeps the weights small while the learning algorithm still fits the data. The main motive is to penalize large coefficients in the loss function so that the model avoids them. The strength of this regularization is controlled by the hyperparameter alpha. If alpha=0, ridge regression reduces to plain Linear Regression. On the other hand, if alpha is very large, all the weights end up close to 0 and the result is a flat line through the data’s mean. So tuning the alpha hyperparameter is what gets the best outcome from ridge regression.

Scikit Learn library:

from sklearn.linear_model import Ridge
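
As a rough illustration of how alpha behaves, the sketch below fits Ridge on a small synthetic dataset. The data, the variable names, and the two alpha values (1e-8 and 1e6) are assumptions chosen for the demo and are not from any real problem. A tiny alpha should give almost the same weights as LinearRegression, while a huge alpha should push the weights towards 0 so that the predictions sit near the mean of the targets.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

# Synthetic data (an assumption for this demo): 3 features, known weights.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + 4.0 + rng.normal(scale=0.1, size=100)

# Baseline without any regularization.
ols = LinearRegression().fit(X, y)

# Very small alpha: almost no penalty, so the fit is close to the baseline.
ridge_small = Ridge(alpha=1e-8).fit(X, y)

# Very large alpha: the penalty dominates and the weights shrink towards 0.
ridge_large = Ridge(alpha=1e6).fit(X, y)

print("Linear Regression weights:", ols.coef_)
print("Ridge (alpha=1e-8) weights:", ridge_small.coef_)
print("Ridge (alpha=1e6) weights: ", ridge_large.coef_)
print("Mean of y:", y.mean())
print("Ridge (alpha=1e6) first predictions:", ridge_large.predict(X[:3]))
```

In practice alpha is usually picked somewhere between these two extremes, for example by trying several values with cross-validation.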

Lasso Regression:

Lasso stands for Least Absolute Shrinkage and Selection Operator. It is similar to Ridge regression, but it uses L1 regularization instead of L2. The main characteristic of Lasso is that it tends to shrink the weights of the least important features all the way to zero. As a result, Lasso regression performs feature selection automatically: only the features with non-zero weights remain, and the output is a sparse model.

Scikit Learn library:

from sklearn.linear_model import Lasso
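
The feature selection behaviour can be seen in a small sketch like the one below. The synthetic dataset (10 features, of which only the first 3 influence the target) and the value alpha=0.1 are assumptions made for this demo. Under those assumptions, Lasso should keep non-zero weights for the informative features and set the remaining weights to exactly zero.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data (an assumption for this demo): 10 features,
# only the first 3 actually influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
true_w = np.zeros(10)
true_w[:3] = [3.0, -2.0, 1.5]
y = X @ true_w + rng.normal(scale=0.1, size=200)

# Fit Lasso with an L1 penalty strength chosen for illustration.
lasso = Lasso(alpha=0.1).fit(X, y)

# Indices of the features that kept a non-zero weight.
selected = np.flatnonzero(lasso.coef_)

print("Lasso weights:", lasso.coef_)
print("Selected feature indices:", selected)
```

The kept weights come out a little smaller in magnitude than the true ones, because the L1 penalty shrinks every weight and not only the ones it removes.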

Further Reading

Posts on Artificial Intelligence, Deep Learning, Machine Learning, and Design Thinking articles:

Rasa X Open Source Conversational AI UI Walk-through

Artificial Intelligence Chatbot Using Neural Network and Natural Language Processing

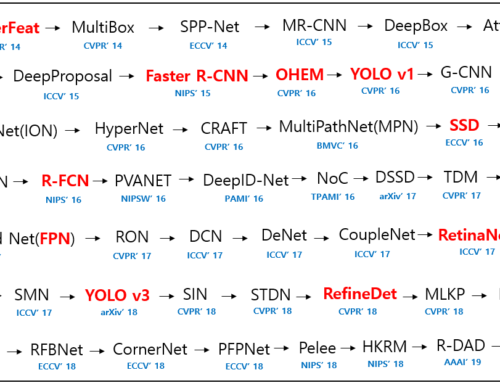

Pre-trained Models for Transfer Learning

EMNIST Dataset Handwritten Character Digits

Posts on SAP:

SAP AI Business Services – Document Information Extraction

SAP AI Business Services: Document Classification

SAP Intelligent Robotic Process Automation, Use Case, Benefits, and Available Features

A simple wireframe design for SAP FIORI UI Chatbot

Simplified SAP GTS Customs Export/Import Documentation with SAP Event Management
