Most of the time, we do not have enough training data for our projects, and often half of a project's time is spent just on collecting data. It is a considerable task and effort. TensorFlow, Keras, and PyTorch provide pre-trained models for transfer learning.

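One common suggestion is to reuse the lower layers of an existing pre-trained model in our own project. In image models, those lower layers tend to learn general features such as edges and textures, which carry over well to new tasks.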
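A minimal sketch of this idea in tf.keras, assuming MobileNetV3Small as the pre-trained model and a new task with `num_classes` classes. The model choice and the helper name `build_transfer_model` are illustrative, not from the original post. Setting `include_top=False` drops the ImageNet classification layers and keeps the lower layers, which are then frozen so that only the new head is trained.

```python
import tensorflow as tf


def build_transfer_model(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: reuse MobileNetV3Small's lower layers under a new classifier head."""
    # include_top=False keeps the convolutional feature extractor and drops the
    # 1000-class ImageNet classifier. weights="imagenet" downloads the pre-trained
    # weights on first use; weights=None gives random initialization instead.
    base = tf.keras.applications.MobileNetV3Small(
        input_shape=input_shape, include_top=False, weights=weights
    )
    # Freeze the reused layers so training does not change their weights.
    base.trainable = False

    inputs = tf.keras.Input(shape=input_shape)
    # tf.keras's MobileNetV3 rescales raw [0, 255] pixels itself by default,
    # so no separate preprocess_input call is made here.
    # training=False keeps the base's BatchNormalization layers in inference mode.
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

For example, `build_transfer_model(5)` gives a five-class classifier whose only trainable weights are the new Dense layer's kernel and bias; it can then be compiled and fit on the project's (small) dataset as usual.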

What is a pre-trained model in simple terms?

Suppose you want to learn stock market fundamentals and make a day trade every day. However, you do not know how the share market works, and you have heard that 95% of people who day trade fail. So you hire a share market guru to train you to become a pro at day trading. The guru is already well trained and agrees to transfer that knowledge to you, covering the basic, intermediate, and advanced levels of the share market that a novice trader needs. A pre-trained model works the same way: it has already learned from a large dataset, and it passes that knowledge on to a new task so you do not have to start from scratch.

The advantage of transfer learning is that it takes less time to train a model, because the model has already been pre-trained.
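One way to see where the time saving comes from is to count how many weights actually get trained. The sketch below is an illustration under assumptions: ResNet50 as the base model, a hypothetical 3-class task, and the hypothetical helper names `count_params`, `frozen_resnet50_classifier`, and `trainable_vs_frozen`. With the pre-trained layers frozen, only the new Dense layer's weights receive gradient updates.

```python
import math

import tensorflow as tf


def count_params(weights):
    """Total number of scalar values in a list of weight variables."""
    return sum(math.prod(int(d) for d in w.shape) for w in weights)


def frozen_resnet50_classifier(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: frozen ResNet50 features plus one trainable Dense layer."""
    # pooling="avg" global-average-pools the last feature map into a 2048-long vector.
    base = tf.keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )
    base.trainable = False  # the pre-trained layers stay fixed during training

    inputs = tf.keras.Input(shape=input_shape)
    features = base(inputs, training=False)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)
    return tf.keras.Model(inputs, outputs)


def trainable_vs_frozen(model):
    """Return (trainable, non-trainable) scalar parameter counts for a model."""
    return count_params(model.trainable_weights), count_params(model.non_trainable_weights)
```

For a 3-class head, the trainable count is only 2048 * 3 + 3 = 6,147 values, while the frozen ResNet50 layers hold over 23 million, so each training step updates a tiny fraction of the network.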

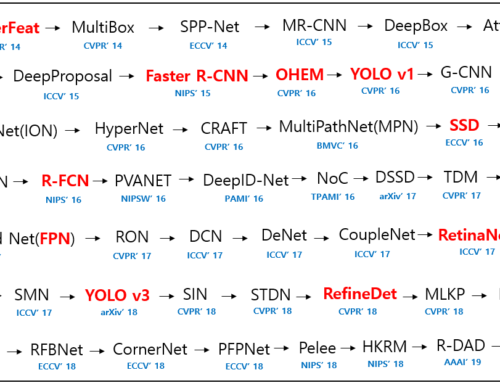

TensorFlow has many pre-trained models, several of which I covered in my previous blogs: VGG16, VGG19, ResNet50, MobileNetV3, Xception, NASNetMobile, and InceptionV3.
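Since all of these models live in tf.keras.applications, a project can switch between them by name. A small sketch, assuming a hypothetical `BACKBONES` lookup table and `load_backbone` helper; MobileNetV3 is listed as both the Small and Large variants that tf.keras provides.

```python
import tensorflow as tf

# Constructors for the models named above, all from tf.keras.applications.
BACKBONES = {
    "VGG16": tf.keras.applications.VGG16,
    "VGG19": tf.keras.applications.VGG19,
    "ResNet50": tf.keras.applications.ResNet50,
    "MobileNetV3Small": tf.keras.applications.MobileNetV3Small,
    "MobileNetV3Large": tf.keras.applications.MobileNetV3Large,
    "Xception": tf.keras.applications.Xception,
    "NASNetMobile": tf.keras.applications.NASNetMobile,
    "InceptionV3": tf.keras.applications.InceptionV3,
}


def load_backbone(name, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: load a headless model by name, pooled to one vector per image."""
    try:
        constructor = BACKBONES[name]
    except KeyError:
        raise ValueError(f"Unknown backbone {name!r}; choose from {sorted(BACKBONES)}") from None
    # include_top=False drops the ImageNet classifier; pooling="avg" averages the
    # final feature map so every backbone returns a flat feature vector.
    return constructor(include_top=False, weights=weights, input_shape=input_shape, pooling="avg")
```

The default 224x224 input is accepted by every model in the table; smaller inputs have per-model minimum sizes (for example 32x32 for VGG16).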

We can use these pre-trained models in our own projects. Below are a few of the modules and functions that tf.keras.applications provides for them.

TensorFlow Keras Modules:

mobilenet_v3 module: MobileNet v3 models for Keras.

resnet50 module: ResNet50 models for Keras.

vgg16 module: VGG16 model for Keras.

vgg19 module: VGG19 model for Keras.

xception module: Xception V1 model for Keras.
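Each module bundles the model constructor with helpers such as `preprocess_input`, which converts images into the input format the pre-trained weights expect, and, for the ImageNet classifiers, `decode_predictions`. A minimal sketch using the resnet50 module; the helper names `prepare_batch` and `classify` are hypothetical, and the input is assumed to be a batch of 224x224 RGB images.

```python
import numpy as np
import tensorflow as tf

resnet50 = tf.keras.applications.resnet50  # the resnet50 module listed above


def prepare_batch(images):
    """Convert RGB images of shape (batch, 224, 224, 3) into ResNet50's input format."""
    # Copy first: preprocess_input may modify a float NumPy array in place.
    x = np.array(images, dtype="float32", copy=True)
    # ResNet50 uses "caffe"-style preprocessing: RGB -> BGR, then subtract the
    # ImageNet per-channel means (no scaling to [0, 1]).
    return resnet50.preprocess_input(x)


def classify(images, top=3):
    """Hypothetical helper: top-k ImageNet labels for each image in the batch."""
    # Downloads the ImageNet weights on first use.
    model = resnet50.ResNet50(weights="imagenet")
    preds = model.predict(prepare_batch(images), verbose=0)
    # decode_predictions maps the 1000 scores to (class_id, name, score) tuples;
    # it downloads the ImageNet class index file on first use.
    return resnet50.decode_predictions(preds, top=top)
```

Each model has its own `preprocess_input`, so it is safest to call the one from the same module as the model.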

For the complete list of modules, see the tf.keras.applications API documentation.

TensorFlow Keras Functions:

MobileNetV3Small(…): Instantiates the MobileNetV3Small architecture.

ResNet50(…): Instantiates the ResNet50 architecture.

VGG16(…): Instantiates the VGG16 model.

VGG19(…): Instantiates the VGG19 architecture.

Xception(…): Instantiates the Xception architecture.
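These constructors share the same core arguments: `include_top` (keep or drop the final classification layers), `weights` (`"imagenet"` or `None`), `input_shape`, `pooling`, and `classes`. A sketch with VGG16 contrasting two common set-ups; the helper names are hypothetical and the input sizes are only examples.

```python
import tensorflow as tf


def vgg16_feature_extractor(input_shape=(160, 160, 3), weights="imagenet"):
    """Pre-trained VGG16 without its top layers, returning one 512-long vector per image."""
    # With weights="imagenet", a custom input_shape (at least 32x32 for VGG16)
    # is only allowed when include_top=False. pooling="avg" global-average-pools
    # the last 512-channel feature map.
    return tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )


def vgg16_from_scratch(num_classes, input_shape=(224, 224, 3)):
    """Randomly initialized VGG16 with a custom number of output classes."""
    # With include_top=True, a classes value other than 1000 requires weights=None,
    # because the ImageNet weights include a 1000-way output layer.
    return tf.keras.applications.VGG16(
        include_top=True, weights=None, input_shape=input_shape, classes=num_classes
    )
```

A typical pattern is to feed the output of `vgg16_feature_extractor` into a small new classifier, while `vgg16_from_scratch` only makes sense when there is enough data to train every layer, which is the situation transfer learning tries to avoid.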

For the complete list of functions, see the tf.keras.applications API documentation.
